Screenshots from the Hololens Presentation.

Some comments to come.

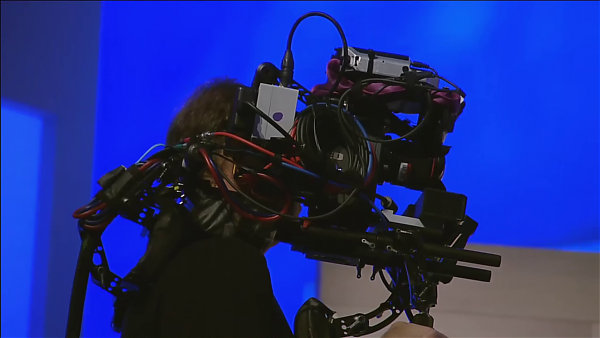

They note at the start that the stream is being seen through a specially rigged camera. This is probably because people spotted this last time and thought the whole thing was fake, rather than it merely being down to the impossibility of filming through a real Hololens device.

That said, it’s also rather likely the view we see through this camera is composited unrealistically well, as overlaying footage onto other footage is easier than overlaying footage onto the eye.

(Those still thinking Hololens is purely a concept rather than a working device might want to bear in mind that Microsoft have hundreds of the HMDs, and people will be getting hands-on with them over the next few days at Build.)

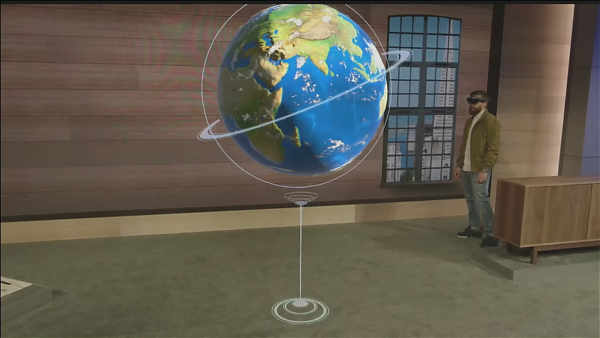

The demo opens with a spinning globe, nothing remarkable.

With a gesture from the hand the room seems to be “scanned” as it transitions to a more filled Hololens environment. While this of course isn’t a real scan, that is very likely the real mesh that Hololens generates in order to position itself and occlude virtual objects behind other objects.

Look carefully and see the pattern of triangles conforming more or less to the real environment.

An open question, perhaps, is how long it took the Hololens to generate this mesh. Do you have to walk around a bit to configure it to your room? Of course, any setup like that wouldn’t be shown in a stage demo.

Turns to see the room with virtual objects.

Turns back to show a virtual dog.

Unfortunately not Densuke.

Another gesture and “Daren” opens a menu – described just as the start menu.

Two things to note here:

1. The overlay very clearly has a much darker outline than the highlight in the background.

This still seems impossible. You can’t overlay black by projection.

I suspect, sadly, this means the footage we are seeing is not even close to what the guy is seeing in terms of contrast and clarity.

2. If you click to enlarge the picture you will see a cursor with a square box around it. This seemed to follow Daren’s head movements and selected the app. It wasn’t confirmed by Microsoft, but it seems this is how they are thinking of handling menu selection in UIs.
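On the first point, here is a tiny sketch of why a dark outline is suspicious. It assumes the headset is an additive see-through display (it can only add light to what the eye already sees), while the rigged camera feed is composited like ordinary video. Both functions and the colour values are purely illustrative and are not anything Microsoft has described.

```python
def additive_blend(background, overlay):
    """See-through display (assumed): overlay light is added to the real scene."""
    return tuple(min(1.0, b + o) for b, o in zip(background, overlay))


def alpha_over(background, overlay, alpha):
    """Video compositing: the overlay replaces a fraction `alpha` of the background."""
    return tuple(alpha * o + (1.0 - alpha) * b for b, o in zip(background, overlay))


# Illustrative values: a brightly lit wall and a pure black outline pixel.
bright_wall = (0.8, 0.8, 0.8)
black_outline = (0.0, 0.0, 0.0)

# Through the headset, adding "black" light changes nothing: the wall shows through.
seen_by_eye = additive_blend(bright_wall, black_outline)

# In the composited stream, the outline can fully cover the wall.
seen_by_camera = alpha_over(bright_wall, black_outline, alpha=1.0)

print(seen_by_eye, seen_by_camera)
```

Under those assumptions the black outline only survives in the camera composite, which fits the suspicion that the stream looks better than the real view.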

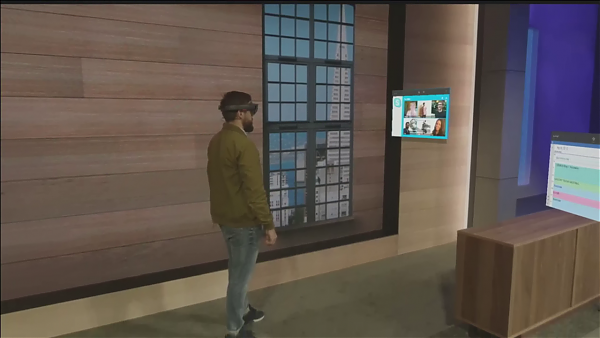

With Skype now selected, Daren turns around..

..and walks back. The app is positioned relative to him and moves with him.

And pins the app to the wall.

Apparently all Windows 10 Universal apps can be pinned in this way.

We pan down to another Hololens app. All these are separate apps running at once, fully animated.

Full true AR multitasking. Slick and unique.

Daren looks at some trailers (Mission Impossible, I believe) while the other Hololens apps still run.

He gets up, tells the app to “Follow Me”, and it does so.

Voice Command for unpinning I assume. This wasn’t made explicit – so far everything else was gestures.

With another hand gesture he makes the resize gizmos appear on the window…

…and stretches it to fill a wall.

It’s noted that all Windows 10 Universal apps have this feature. It’s just like resizing a window on a normal monitor really.

It’s also very much like VidScreens in ReBoot.

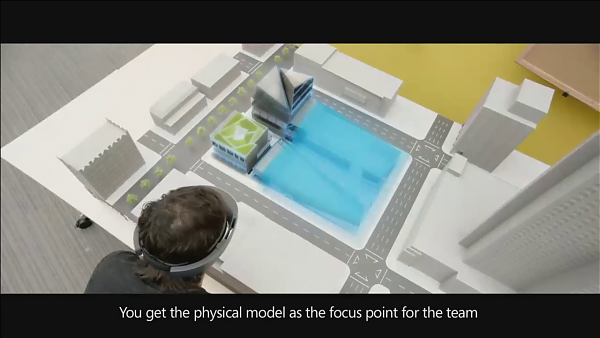

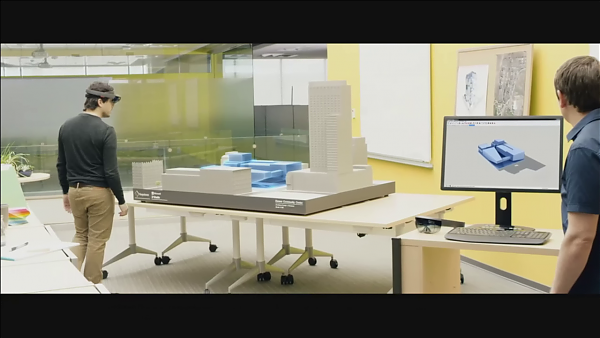

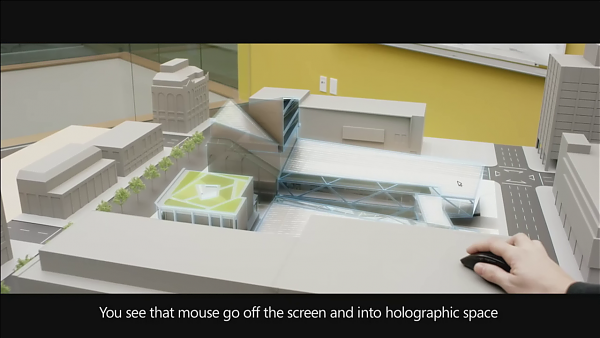

Next up is a premade video showing an ArchVis use-case.

Using it with a full PC – despite Hololens itself being standalone.

Also of note: using Hololens with a mouse. (For motion control skeptics like myself this is fantastic news… I would still prefer a 3D stylus, mind.)

Viewing Hololens on site at a building works. Great use-case, but it looks like completely mocked footage for now.

Hololens helping you spot that pillars in front of doors are bad design.

Next up is a physical tour of the hardware. Mostly beauty shots, but of note is the ability to adjust the size to fit your head better.

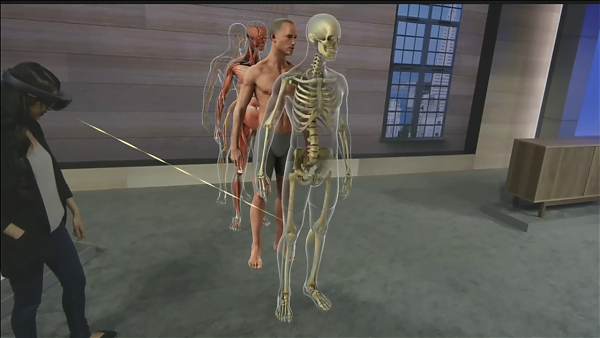

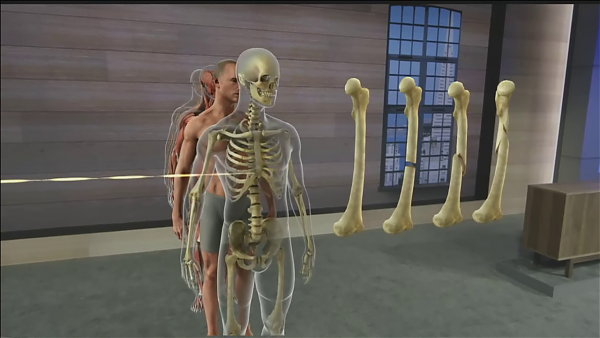

Next up is a medical teaching use-case demo, this time back on the stage rather than a premade video.

Seems to show gaze tracking, but this wasn’t explicitly mentioned.

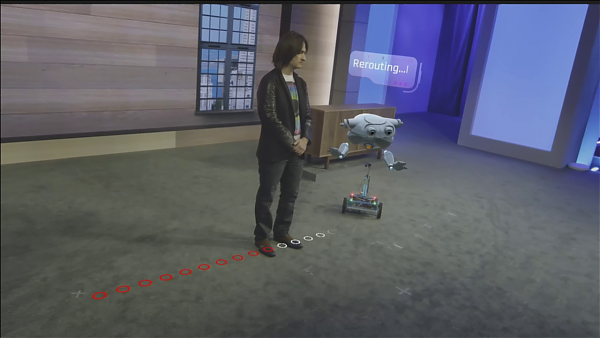

Next up is a demo of how the Hololens can work together with physical things, such as this robot.

The robot changed LED colour based on the Hololens interface being selected around it.

More significantly though, they demo’d telling the (real) robot to go somewhere. Its AR overlay stayed synced.

Setting a new path; note the cursor on the floor and the hand gesture.

Look at her head and hand. I believe it’s plotting the ray between the two, and the cursor is on the first surface it hits.
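If that guess is right, the cursor placement could be sketched roughly as below: cast a ray from the head through the hand and keep the nearest hit against the room mesh. This is only my assumption about the approach, using the standard Möller-Trumbore ray/triangle test. The function names, the tiny hand-made mesh, and the coordinates (y is up, metres) are all made up for illustration.

```python
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin, direction, tri, eps=1e-9):
    """Moller-Trumbore test: distance t along the ray to the triangle, or None."""
    v0, v1, v2 = tri
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:          # ray is parallel to the triangle
        return None
    inv = 1.0 / det
    tvec = sub(origin, v0)
    u = dot(tvec, p) * inv
    if u < 0.0 or u > 1.0:
        return None
    q = cross(tvec, e1)
    v = dot(direction, q) * inv
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None   # ignore hits behind the head


def cursor_position(head, hand, mesh):
    """Point where the head-to-hand ray first hits the mesh, or None."""
    direction = sub(hand, head)
    nearest = None
    for tri in mesh:
        t = ray_triangle(head, direction, tri)
        if t is not None and (nearest is None or t < nearest):
            nearest = t
    if nearest is None:
        return None
    return tuple(h + nearest * d for h, d in zip(head, direction))


# Hypothetical room: a 10 m x 10 m floor made of two triangles.
floor = [((-5, 0, -5), (5, 0, -5), (5, 0, 5)),
         ((-5, 0, -5), (5, 0, 5), (-5, 0, 5))]
# A table-top triangle at 1 m height, sitting between the user and the floor hit.
table = [((-1, 1, 0.5), (1, 1, 0.5), (0, 1, 2))]

head = (0.0, 2.0, 0.0)
hand = (0.0, 1.5, 0.5)

on_floor = cursor_position(head, hand, floor)
on_table = cursor_position(head, hand, floor + table)
print(on_floor, on_table)
```

With the table in the mesh the cursor lands on the table instead of the floor, which is the "first surface it hits" behaviour described above.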

The robot is given a new path by setting this destination.

However, the other guy gets in the way.

The Hololens is giving the robot the data needed to pathfind – in near real time with updates.

This is significantly cool as it means apps have at least some access to the room scan the Hololens has, and that data updates if the room changes.

…it plots a course around using this new data.
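As a rough illustration of that replanning step, here is a minimal sketch that assumes the room scan is flattened into a 2D occupancy grid and the robot uses a plain breadth-first search. Nothing in the demo says how the real pathfinding works; the grid, the `plan_path` name, and the cell coordinates are all hypothetical.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Shortest path on a 4-connected grid via BFS; cells equal to 1 are blocked."""
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the parents to rebuild the route.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == 0 and nxt not in came_from):
                came_from[nxt] = cell
                queue.append(nxt)
    return None  # no route to the goal


# Hypothetical 3 x 5 patch of floor, all free to begin with.
room = [[0] * 5 for _ in range(3)]
start, goal = (1, 0), (1, 4)

first_route = plan_path(room, start, goal)

# The other guy steps into the robot's way: the scan marks that cell as blocked.
room[1][2] = 1
new_route = plan_path(room, start, goal)

print(first_route)
print(new_route)
```

The interesting part is not the search itself but that the blocked cell comes from updated scan data, so rerunning the planner is enough to get a detour.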
